To the editors:

On a subjective level, conscious perception seems to be smooth and continuous. The temporal order of events can be accurately perceived when the events in question are only a few milliseconds (ms) apart in time.1 That might seem to support a claim that the temporal resolution of conscious perception is a few milliseconds. But several authors have claimed that conscious perception comprises a series of temporal frames, each covering a much longer time span. Generally speaking, a frame is a body of perceptual information that is initiated as a whole at one moment, persists unchanged for whatever the proposed duration of the frame may be, and is then replaced by the next one.2

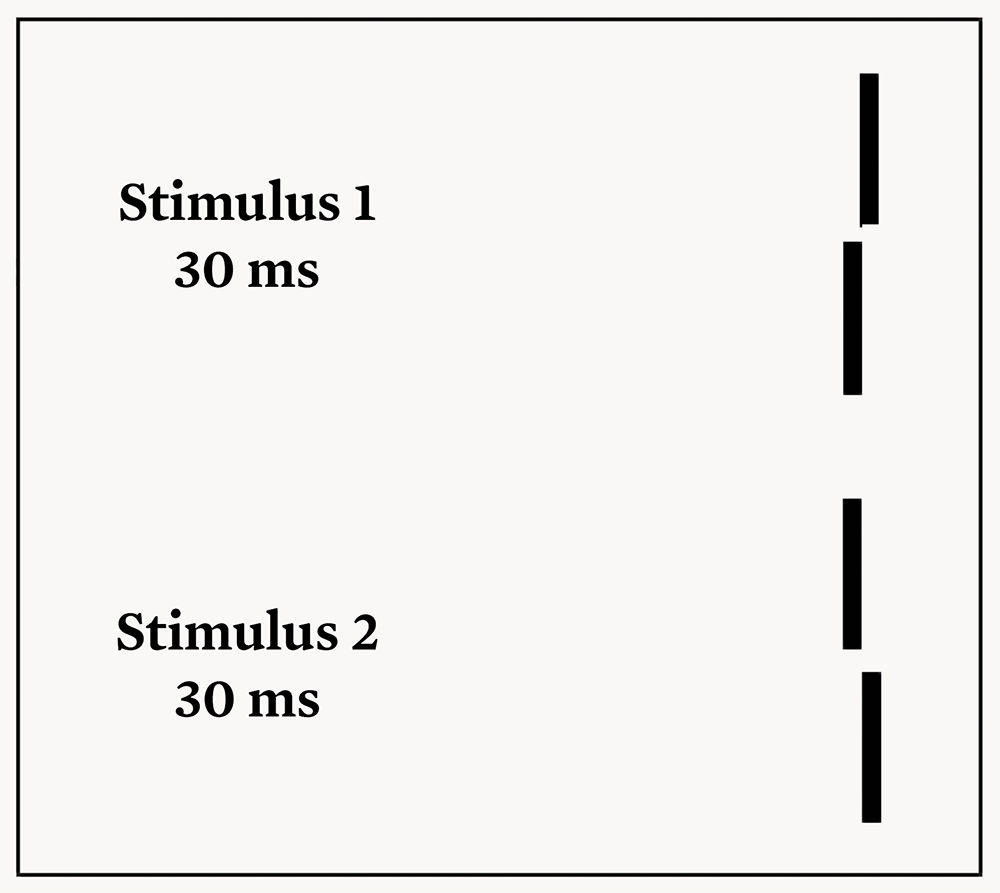

Imagine two vertical bars briefly presented one above the other. If one bar is horizontally offset from the other, this arrangement is termed a vernier. In a 2009 study, Frank Scharnowski et al. presented two verniers in rapid succession at the same retinotopic location, with spatial offsets in opposite directions.3 Each vernier was presented for 30ms, with no blank interval between them (Figure 1). They found that the two verniers were not perceived as such. Instead, a single vernier was perceived, with an offset that was a weighted average of the two individual offsets. Scharnowski et al. applied transcranial magnetic stimulation (TMS) at latencies ranging from 0 to 420ms after onset of the first vernier. For TMS latencies from 45 to 120ms, the second vernier dominated the fused percept; for latencies from 145 to 370ms, the first vernier dominated.

Figure 1.

Example stimulus presentation in the study by Scharnowski et al. (2009). In the study the two stimuli were presented to the same location on the retina.
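The fused percept can be written as a simple weighted average of the two offsets. The sketch below is only an illustration under stated assumptions: the function name `fused_offset`, the offsets of +1 and -1 (arbitrary units), and the weights are all hypothetical, and treating the TMS effect as a shift in the weights is a simplification, not a model reported by Scharnowski et al.

```python
def fused_offset(offset_1: float, offset_2: float, weight_1: float) -> float:
    """Weighted average of two vernier offsets (hypothetical helper).

    weight_1 is the weight of the first vernier; the second vernier gets
    1 - weight_1, so the two weights always sum to 1.
    """
    if not 0.0 <= weight_1 <= 1.0:
        raise ValueError("weight_1 must lie in [0, 1]")
    return weight_1 * offset_1 + (1.0 - weight_1) * offset_2


# Opposite offsets in arbitrary units: +1 = offset to the right, -1 = to the left.
v1, v2 = +1.0, -1.0
print(fused_offset(v1, v2, 0.5))  # equal weights: the offsets cancel
print(fused_offset(v1, v2, 0.3))  # second vernier dominates: result is negative
print(fused_offset(v1, v2, 0.7))  # first vernier dominates: result is positive
```

With equal weights the opposite offsets cancel. Shifting weight toward one vernier moves the fused offset toward that vernier's side, which is the sense in which one vernier "dominates" the fused percept.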

Scharnowski et al. pointed out that, for that shift in perception to occur, information about each of the verniers must persist in the system for at least 370ms. Yet participants were able to report only the fused percept, and there was no evidence that either vernier was perceived as a separate entity at any time. Based in part on that finding, Michael Herzog et al. argued in a 2016 paper that conscious perception proceeds in frames of about 400ms.4 That is, following presentation of a stimulus, relevant information is combined into a package over a processing interval of 400ms, and the package is then released all at once as the product of perception.

In a 2020 paper, Michael Herzog et al. proposed a frame duration of ~450ms.5 This was based on the time span of temporal integration for a rapid series of stimuli that they had found in a previous study.6 In fact, 450ms was the longest individual mean in that study. Individual means ranged from 290 to 450ms, with an overall mean of about 370ms. That matches the 370ms found by Scharnowski et al., so 370ms is perhaps a better estimate for the time span of a frame. If Herzog et al. are right, this estimate implies that conscious perception is a stuttering progression of stills at a rate of two or three per second. How can that be the case if the temporal order of events separated by a few milliseconds can be accurately perceived?
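The step from frame duration to "two or three per second" is simple arithmetic: 1000 divided by the frame duration in ms gives frames per second. As a quick check using only the durations given above (the helper name is illustrative):

```python
def frames_per_second(frame_ms: float) -> float:
    """Number of back-to-back frames of the given duration (ms) in one second."""
    return 1000.0 / frame_ms


# Durations from the text: shortest individual mean, overall mean, longest individual mean.
for label, ms in [
    ("shortest individual mean", 290),
    ("overall mean", 370),
    ("longest individual mean", 450),
]:
    print(f"{label}: {ms} ms -> {frames_per_second(ms):.2f} frames/s")
```

A 370ms frame gives about 2.7 frames per second; the shortest and longest individual means give about 3.4 and 2.2 frames per second.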

The answer proposed by Herzog et al. in their 2016 paper is that the frame includes information about temporal order and duration. That is, a static frame lasting for 370ms can, for example, hold the information that event A lasted for 50ms and started 20ms after event B, which lasted for 10ms. Thus, temporal continuity and differentiation are perceived despite the occurrence of long static frames, because temporal information is encoded into the body of perceptual information in the frame. That encoding must happen whether or not the frame hypothesis is valid, because time cannot be directly perceived. Consider space. A line that is 30cm long can be perceived as having that length, but the percept of the line is not itself 30cm long. It is information that the line is 30cm long. The length is a kind of meaningful label attached to the percept of the line. So it is with time. The percept of a stimulus as lasting for 50ms does not itself last for 50ms. More precisely, whatever duration the percept has is not tied to the duration of the stimulus. The duration is a kind of semantic label attached to the stimulus, just as the information about the length of the line is. So it is possible that, when a new frame is initiated, it includes information about the durations and ordinal relations of all the events that fall into the frame. In effect, the apparent continuity of conscious perception is a kind of illusion generated by perceptual processes that label things in the recent past with temporal information.
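To make the labeling idea concrete, here is a minimal sketch of a frame as a data structure, assuming a frame can be modeled as a fixed span plus a set of events carrying onset and duration labels. The class and method names are hypothetical, and "20ms after event B" is read here as onset to onset, measured from a common reference point. The frame object never changes, yet temporal order and gaps can still be read from its labels.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class LabeledEvent:
    """An event stored together with temporal labels (hypothetical representation)."""
    name: str
    onset_ms: float     # label: when the event started, relative to a common reference
    duration_ms: float  # label: how long the event lasted


@dataclass(frozen=True)
class Frame:
    """A static frame: it persists unchanged for span_ms and carries labeled events."""
    span_ms: float
    events: tuple[LabeledEvent, ...]

    def temporal_order(self) -> list[str]:
        """Event names sorted by their onset labels."""
        return [e.name for e in sorted(self.events, key=lambda e: e.onset_ms)]

    def onset_gap_ms(self, first: str, second: str) -> float:
        """Difference between the onset labels of two named events."""
        onsets = {e.name: e.onset_ms for e in self.events}
        return onsets[second] - onsets[first]


# The example from the text: B lasts 10ms; A starts 20ms after B and lasts 50ms.
frame = Frame(
    span_ms=370,
    events=(
        LabeledEvent("A", onset_ms=20, duration_ms=50),
        LabeledEvent("B", onset_ms=0, duration_ms=10),
    ),
)
print(frame.temporal_order())        # ['B', 'A']
print(frame.onset_gap_ms("B", "A"))  # 20
```

The order in which the events are stored does not matter; the reported order comes entirely from the onset labels, just as perceived length comes from a length label rather than from the percept being long.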

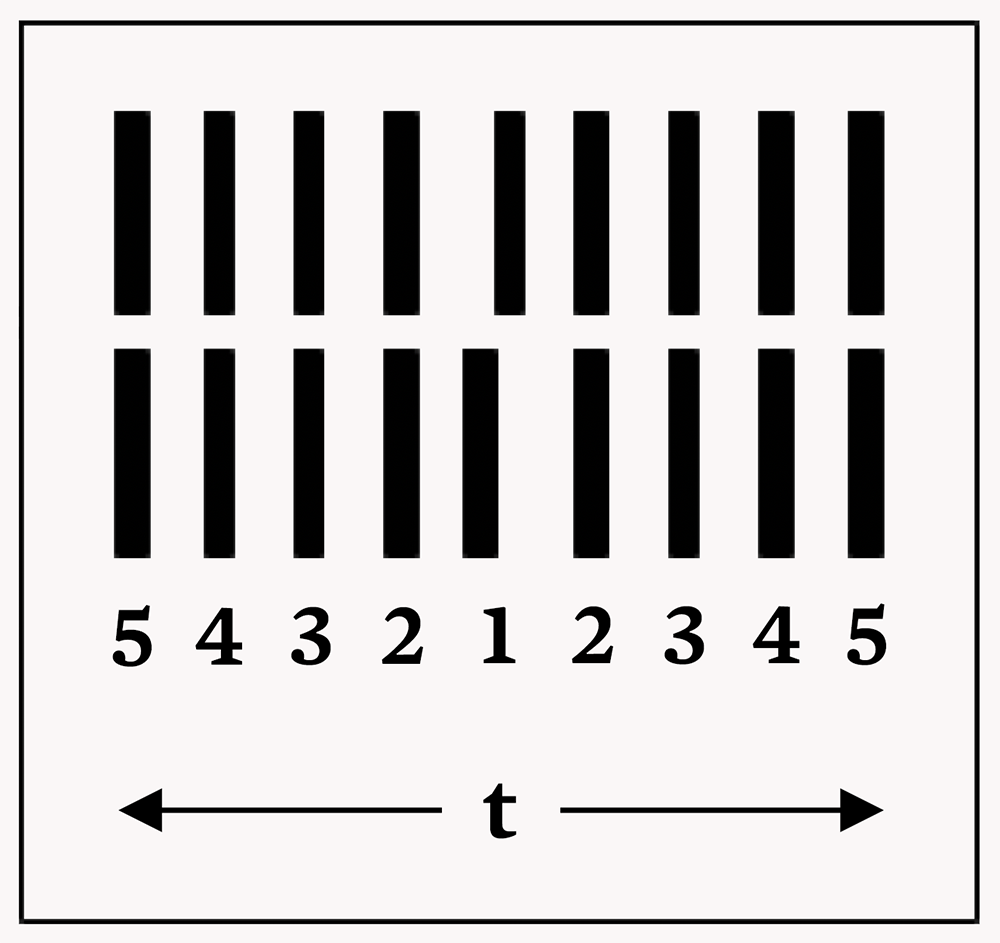

But there is another, more serious problem for the 370ms frame hypothesis. The question that should be asked about the 370ms window is: why? More specifically, what processing function does it serve? A clue can be found in how the stimuli used in a 2019 study by Leila Drissi-Daoudi et al. are perceived. Figure 2 shows a simplified schematic version of the basic stimulus they used. The physical stimulus is a series of vertically aligned pairs of bars. Some of these are verniers; a vernier is shown at position 1 in Figure 2. The pairs of bars are presented briefly and in succession at close but separate physical locations; the numbers in Figure 2 indicate the order in which they appear. But the stimulus is not perceived as a series of separate objects, each one briefly popping up and disappearing again. Each side of the stimulus is perceived as a single object moving across the screen. That tells us that the perceptual processing system is constructing a single persisting perceptual object from a sequence of separate stimuli.

Constructing objects and distinguishing them from the rest of the perceptual environment is an important job in perceptual processing. Consider a ball that has been thrown in the air. Perceiving the ball seems quite natural and unproblematic. In fact, it is the outcome of complex and rapid perceptual processing that generates a set of information about the ball as a single, bounded object, with features such as color and shape attached and integrated, persisting over time, and distinct from information about its visual environment.

Figure 2.

Simplified schematic rendition of a stimulus used by Drissi-Daoudi et al. in their 2019 study. Numbers under the pairs of bars indicate temporal order of presentation. Initially, a vernier is presented (t1 in the figure). This is followed by a diverging sequence of pairs of vertically aligned bars. Eighteen were presented in total, but only the first four are shown in the figure.

Sometimes perceiving objects is not easy because they are temporarily occluded—think of observing the ball moving behind a set of railings—or because the stimulus information is at threshold level for detection, or ambiguous, or shrouded in what is essentially noise. Under such circumstances, the perceptual system attempts to resolve a perceptual object by integrating input over time. That is what is happening with the vernier stimuli. The verniers form a temporally and spatially ordered series, from which the perceptual system infers that they are connected, but there are gaps between them. Faced with that incompleteness, the perceptual system retains recent information and integrates it over time, and the percept of a single moving object emerges from that temporal integration process.

There are many kinds of temporal integration in the brain, all sharing the function of attempting to pin down perceptual objects and their features in the face of ambiguous, faint, incomplete, or noisy stimuli. But they do not all integrate over the same time span. Here are just three examples. If letters making up a word are presented at different times, the whole word can still be perceived so long as the time span of the stimulus presentation is no more than 80ms.7 If three consecutive stimuli in a set of eighteen presented in a rapid series form three of the corners of a square, they can be integrated into a percept of a broken square.8 That is temporal integration across 240ms. In a 2016 study by Ernest Greene, letter stimuli made of arrays of dots were presented.9 Dots were divided between two successive stimuli in such a way that a letter could not be identified from one part-stimulus alone, but could still be identified from the combination of the two parts. In Greene’s study, better than chance identification occurred if the part-stimuli were separated by durations up to 500ms, indicating some degree of temporal integration on that timescale.

Those studies show temporal integration over 80ms, 240ms, and 500ms. None of those integration times matches the 370ms time span found by Scharnowski et al. in 2009 and Drissi-Daoudi et al. in 2019.10 A time span of temporal integration for one kind of processing cannot support a hypothesis of a general time span for frames of conscious perception, simply because different kinds of processing have different time spans of integration. So there is no frame of conscious perception. There are just multiple temporal integration processes operating on different—and variable—timescales. The longest timescale that has been identified so far is about 3,000ms.11 Surely no one would propose that there is a frame of conscious perception with a time span of 3,000ms.

In his review, Rufin VanRullen argued for a temporal window of about 100ms. In support of this claim, he proposed that 100ms is “the approximate duration for which a stimulus can still have a strong retrospective impact on the perception of preceding events.” Readers might be wondering how a stimulus can have an effect on something that is in the past. Does the effect somehow go backwards in time? What happens is that information is maintained in perceptual processing for a short period of time after the stimulus that gave rise to it has terminated, and during that short period of time it is available for modification in light of subsequent input. A simple example is an apparent motion stimulus. Under some circumstances, if two stimuli are presented consecutively at different but nearby locations, what is perceived is a single object moving from the location of the first stimulus to that of the second. The percept of motion cannot be constructed until the second stimulus has been presented. When the second stimulus is presented, the history leading up to it is reconstructed in perception.

Information can be postdictively modified for only a short time before it becomes unassailable, but VanRullen’s claim that the maximum is ~100ms is not correct. Several studies have shown postdictive modification on longer timescales: 200ms, 300ms, and 300–400ms.12 Of these, the second study, published by Sieu Khuu et al., merits a closer look. They presented five brief visual flashes, three at one location and two at another. The reported location of the third flash, the last of those at the first location, was significantly displaced towards that of the fourth flash, the first one at the second location, even when the interval between the flashes was 300ms. Even though information about the third flash at its veridical location remains in the perceptual system for 300ms, the flash is not perceived at that location. It is perceived only at the location that is postdictively interpolated when the fourth flash occurs. Khuu et al. made a case that the visual system constructs a plausible physical interpretation of the flashes as a single object moving smoothly from the first location to the second. Even though most of that motion is not perceived, the third flash is perceptually relocated to fit the motion hypothesis that the visual system has adopted. It is a bit like perceiving a moving object through railings: the object is visible only at intervals, but its motion is still perceived as continuous. This result does not support the hypothesis of a ~300ms time window in conscious perception. It is just another kind of temporal integration, representing the visual system’s attempts to make plausible connections between separate stimuli.

The other kind of evidence VanRullen called on to support the 100ms frame hypothesis comes from two of his own papers.13 In 2014, he and his colleagues argued that evidence from EEG research supported a hypothesis of frames occurring at 7–13Hz, that is, one roughly every 80–140ms. In 2016, he reviewed a large body of research on the effects of pre-stimulus oscillatory phase on perception, concluding that the research showed peaks at 7Hz and 11Hz, corresponding to cycles of about 140ms and 90ms.14 He argued that the latter reflected sensory and perceptual processing and the former reflected periodic attentive sampling. The hypothesized frames would be of a different kind from those proposed by Herzog et al. in 2016 and 2020 by virtue of being endogenous.15 That is, instead of being initiated by a stimulus, they run continuously and independently of stimulation, thereby creating the periodicity detected in the EEG recording. Thus, although VanRullen proposed a hierarchy of stages in conscious perception as part of his review, the exogenous frames proposed by Herzog et al. in 2016 and Drissi-Daoudi et al. in 2019 would not be synchronized with the endogenous frames proposed by VanRullen himself in 2016. It is hard to see how they could have a functional hierarchical connection.
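The correspondence between oscillation frequency and frame duration is just the cycle period: 1000 divided by the frequency in Hz gives the period in ms. A short check (the helper name is illustrative) shows that 7–13Hz corresponds more exactly to about 77–143ms, and that 7Hz and 11Hz correspond to about 143ms and 91ms.

```python
def period_ms(freq_hz: float) -> float:
    """Duration of one oscillatory cycle, in milliseconds, for a frequency in Hz."""
    return 1000.0 / freq_hz


# Frequencies mentioned in the text: the 7-13Hz band and the 7Hz and 11Hz peaks.
for f in (7, 11, 13):
    print(f"{f} Hz -> {period_ms(f):.1f} ms per cycle")
```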

There is evidence for many timescales of periodicity in EEG. Any one of them could, in principle, function as a generator of frames of conscious perception. So why would the 7Hz cycle be favored for this? The argument would be that attention ebbs and flows on that timescale, so that new perceptual information is attentively registered at one phase of the cycle, when the gate to attentive processing is maximally open, but not at the opposite phase, when the gate is closed. Thus, perception is attentively updated only when attention is maximally engaged with the stimulus, which happens approximately once every 140ms. That hypothesis predicts numerous effects of pre-stimulus oscillatory phase on processing. A supplementary table provided by VanRullen in his 2016 paper lists many studies that have sought that kind of evidence.16 As he writes, effects on perception were found for oscillatory frequencies ranging from 1Hz to 30Hz, “but without any apparent logic relating frequency to perceptual or cognitive function.”17 Even more problematic, the proportion of variability in perception explained by oscillatory phase was only around 10 to 20%. In his review, VanRullen now refers to “the metaphorical camera shutter closing every 100ms or so,” but in fact the shutter does not close; at most, it lets through about 20% less information. Even if oscillatory phase is an indicator of attentive gating, the gate is very far from being closed at any point in the cycle. The evidence, therefore, does not support the hypothesis of a clear and absolute boundary between one frame and the next. On the contrary, there are just minor periodic fluctuations in receptivity to information.
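The difference between a shutter that closes and a gate that merely dips can be sketched with a hypothetical sinusoidal gain. Everything in this sketch is an assumption for illustration: the function, the cosine shape, and the modulation depths. A depth of 0.2 loosely mirrors the "about 20% less information" above; it is not derived from the variance-explained figures.

```python
import math


def receptivity(phase_rad: float, depth: float) -> float:
    """Hypothetical gain of an attentional gate at a given oscillatory phase.

    The gain is 1.0 at the optimal phase (0 rad) and 1 - depth at the opposite
    phase (pi rad). depth=1.0 models an ideal shutter that closes completely.
    """
    return 1.0 - depth * (1.0 - math.cos(phase_rad)) / 2.0


for depth in (1.0, 0.2):
    best = receptivity(0.0, depth)
    worst = receptivity(math.pi, depth)
    print(f"depth={depth}: gain ranges from {worst:.2f} to {best:.2f}")
```

With depth 1.0 the gain falls to zero at the opposite phase, as a true camera shutter would; with depth 0.2 it never drops below 0.8, so information keeps flowing throughout the cycle.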

Are there frames of conscious perception? It remains possible, but the evidence currently favors a view that there are many kinds of processes in perception, each integrating or summating information on its own timescale. When a given process finishes, its product enters conscious perception. The products of different processes enter conscious perception piecemeal, with no overall synchrony.